The upcoming Christmas season gives us an opportunity to pause for a moment and reflect on our daily activities, including those within the ROMSOC program. Very often, we find traces of Christmas in the most unexpected places, including our projects.

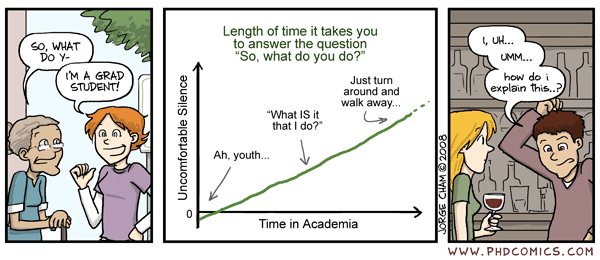

My name is Jonasz Staszek and I am currently working at Friedrich-Alexander-Universität Erlangen-Nürnberg as the ROMSOC ESR7 on a problem closely related to railways, namely the optimal deployment of resources in cross-border rail traffic. In this blog entry, I would like to tell you a little about my project, the method I plan to use and the trace of Christmas in it.

The Problem

Citizens and residents of the European Union enjoy the right of freedom of movement and economic activity. Our companies benefit from it too when doing business outside their home country. In many cases, though, neither individuals nor companies would be able to benefit from these rights if appropriate technology, allowing mobility of people and goods, were not in place.

Many of the difficulties railway carriers face today have historical roots. Early on, each enterprise introducing railways chose the technology that best suited its needs. Later, in the 19th and 20th centuries, as railway companies were nationalized, each country made its own bold (and often costly) decisions about standardizing the railway system on its territory.

We still face the consequences of these historical developments today, and things are unlikely to change in the near future, mainly because of the enormous cost of such transformations. For example, the track gauge, i.e. the distance between the rails of a track, is standardized at 1435 mm nearly everywhere in Europe, with the exception of Spain and Portugal (1668 mm), Finland (1524 mm) and the former USSR republics (1520 mm). Another structural difference concerns the power supply for electric locomotives: in the EU alone there are five such systems. Yet another example is the variety of national regulations on the training and licensing of drivers, the mandatory safety systems installed on the network and in the locos, access to the railway network (e.g. the right to run a train on a given section of a country's network), etc.

Clearly, too many differences exist for an international railway carrier to be able to manage its resources centrally. Each country, with its specific railway system, usually has its own carrier(s), network manager(s), driver training systems and safety regulations. Hence, international carriers usually have a separate entity to manage rail traffic on the territory of each country. Consequently, decisions on the deployment of resources on particular trains are made in a dispersed fashion: by and large, each entity takes them on its own within its area of responsibility. This can lead to a suboptimal use of the existing assets.

In my research, I intend to tackle exactly this problem: given the set of trains from point A to point B (not necessarily in the same country) ordered by the customers of a railway carrier and the existing resource base (locos and drivers), find the optimal (e.g. cost-minimizing or utilization-maximizing) assignment of assets to the connections to be carried out.

The solution approach

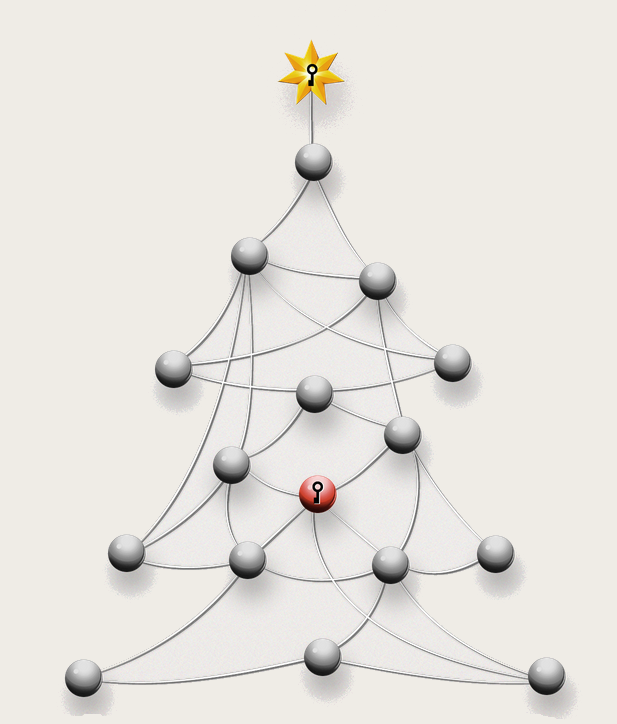

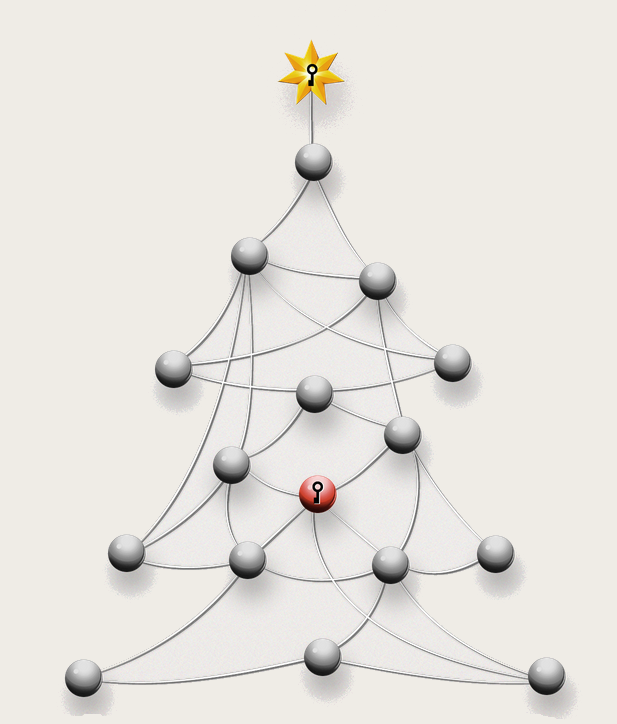

As the Christmas season is already very close, let me illustrate the way I intend to approach this problem with a nice puzzle developed by my colleagues at FAU Erlangen-Nürnberg (an interactive version is available online). The puzzle is used in a competition organized by my chair every Christmas: for each correct solution, a certain amount of money is donated to a good cause.

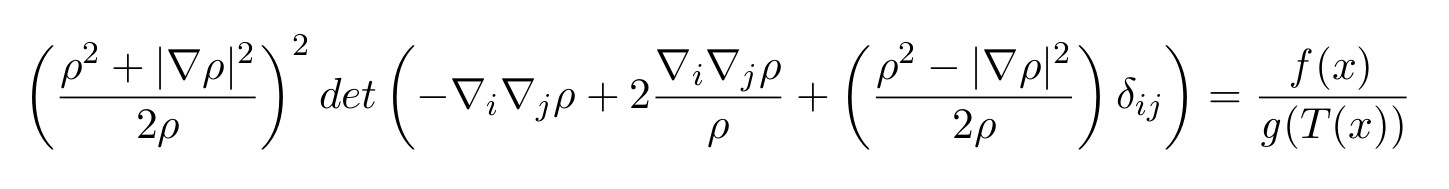

The task is to colour the balls blue, red or yellow in such a way that no two connected balls get the same colour. In mathematical terms, we are dealing with a graph colouring problem: we have an undirected graph $G = (V, E)$ with node set $V$ and edge set $E$, which needs to be coloured with colours from a set $C$ (here $|C| = 3$). We already know the colour of two of the nodes. Hence, we are looking for an assignment of colours to the nodes such that the following criteria are met:

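- Each node $v$ is coloured with exactly one colour:

$$\sum_{k \in C} x_{v,k} = 1 \quad \forall v \in V.$$

- No neighbour is coloured with the same colour:

$$x_{u,k} + x_{v,k} \le 1 \quad \forall \{u, v\} \in E,\, \forall k \in C.$$

- $x_{v,k}$ is a binary variable, equal to 1 if node $v$ receives colour $k$ and 0 otherwise:

$$x_{v,k} \in \{0, 1\} \quad \forall v \in V,\, \forall k \in C.$$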
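To make the three criteria above tangible, here is a minimal Python sketch of a backtracking search that looks for a colouring extending the pre-coloured nodes. It is only an illustration and not the method used in the project. The function name `colour_graph` and the small example graph are assumptions made for this post; the actual puzzle graph is not reproduced here.

```python
from typing import Dict, Hashable, List, Optional, Tuple

Node = Hashable
Colour = str


def colour_graph(
    nodes: List[Node],
    edges: List[Tuple[Node, Node]],
    colours: List[Colour],
    fixed: Optional[Dict[Node, Colour]] = None,
) -> Optional[Dict[Node, Colour]]:
    """Return a proper colouring that extends `fixed`, or None if none exists."""
    # Adjacency sets of the undirected graph.
    neighbours = {v: set() for v in nodes}
    for u, v in edges:
        neighbours[u].add(v)
        neighbours[v].add(u)

    assignment = dict(fixed or {})
    # The pre-coloured nodes must not clash with each other.
    for u, v in edges:
        if u in assignment and v in assignment and assignment[u] == assignment[v]:
            return None

    todo = [v for v in nodes if v not in assignment]

    def backtrack(i: int) -> bool:
        if i == len(todo):
            return True  # every node carries exactly one colour
        v = todo[i]
        for k in colours:
            # A colour is allowed only if no neighbour already carries it.
            if all(assignment.get(u) != k for u in neighbours[v]):
                assignment[v] = k
                if backtrack(i + 1):
                    return True
                del assignment[v]  # undo and try the next colour
        return False

    return assignment if backtrack(0) else None


# Hypothetical toy instance: a triangle a-b-c with an extra node d attached to c.
nodes = ["a", "b", "c", "d"]
edges = [("a", "b"), ("b", "c"), ("a", "c"), ("c", "d")]
solution = colour_graph(nodes, edges, ["blue", "red", "yellow"],
                        fixed={"a": "blue", "d": "red"})
print(solution)
```

Backtracking takes exponential time in the worst case, so for larger instances one would rather hand the binary formulation to an integer programming solver.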

To draw a connection between the Christmas tree and the resource allocation problem, we could think of the colours as the trains which need to run, the nodes as the resources and the edges as conflicts which prohibit the employment of a pair of resources on a given train (e.g. a driver not licensed for a given loco, a loco unable to operate on a given section of the network, etc.).
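The analogy can be tried out on a tiny, entirely made-up instance. In the sketch below, the resource names, train names and conflicts are hypothetical, and the function `feasible_assignments` simply enumerates all assignments by brute force. It encodes only the conflict rule described above and none of the further requirements a real train would have (such as needing both a driver and a loco).

```python
from itertools import product
from typing import Dict, Iterator, List, Tuple


def feasible_assignments(
    resources: List[str],
    trains: List[str],
    conflicts: List[Tuple[str, str]],
) -> Iterator[Dict[str, str]]:
    """Yield every resource -> train assignment with no conflicting pair on one train."""
    # Each resource (node) gets exactly one train (colour).
    for choice in product(trains, repeat=len(resources)):
        assignment = dict(zip(resources, choice))
        # Conflicting resources (edges) must end up on different trains.
        if all(assignment[u] != assignment[v] for u, v in conflicts):
            yield assignment


# Hypothetical data: two drivers, two locos, two trains.
resources = ["driver_A", "driver_B", "loco_1", "loco_2"]
trains = ["T1", "T2"]
conflicts = [
    ("driver_A", "loco_2"),  # assumed: driver A is not licensed for loco 2
    ("driver_B", "loco_1"),  # assumed: driver B is not licensed for loco 1
]

options = list(feasible_assignments(resources, trains, conflicts))
for option in options:
    print(option)
```

Enumeration is only workable for toy sizes like this one, but it shows how the conflicts alone already rule out most of the possible assignments.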

Such model formulations are frequently seen in the optimization of railway-related problems [1], [2], [3], [4]. They have primarily been used for train scheduling problems, e.g. helping dispatchers select the order in which trains should use the platforms in a station. Graph colouring is also popular as a modelling and optimization structure in other areas; for instance, it is increasingly used to optimize the use of LTE transmission frequencies [5], [6].

After this small glimpse into my research, I wish you a blessed Christmas time this year, full of Lebkuchen (gingerbread) and Glühwein (mulled wine)!

References:

[1] Lusby, Richard M., et al. “Railway Track Allocation: Models and Methods.” OR Spectrum, vol. 33, no. 4, 2009, pp. 843–883, doi:10.1007/s00291-009-0189-0.

[2] Cardillo, Dorotea De Luca, and Nicola Mione. “k L-List λ Colouring of Graphs.” European Journal of Operational Research, vol. 106, no. 1, 1998, pp. 160–164, doi:10.1016/s0377-2217(98)00299-9.

[3] Billionnet, Alain. “Using Integer Programming to Solve the Train-Platforming Problem.” Transportation Science, vol. 37, no. 2, 2003, pp. 213–222, doi:10.1287/trsc.37.2.213.15250.

[4] Cornelsen, Sabine, and Gabriele Di Stefano. “Track Assignment.” Journal of Discrete Algorithms, vol. 5, no. 2, 2007, pp. 250–261, doi:10.1016/j.jda.2006.05.001.

[5] Tsolkas, Dimitris, et al. “A Graph-Coloring Secondary Resource Allocation for D2D Communications in LTE Networks.” 2012 IEEE 17th International Workshop on Computer Aided Modeling and Design of Communication Links and Networks (CAMAD), 2012, doi:10.1109/camad.2012.6335378.


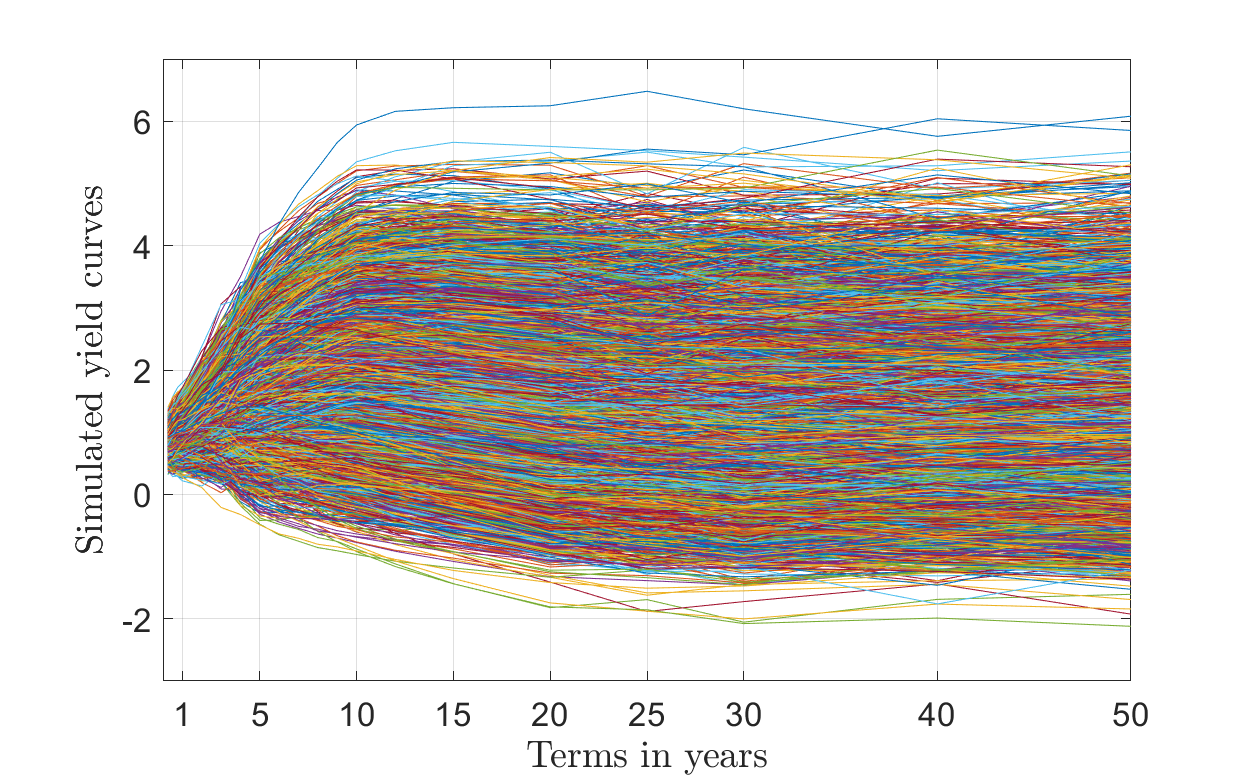

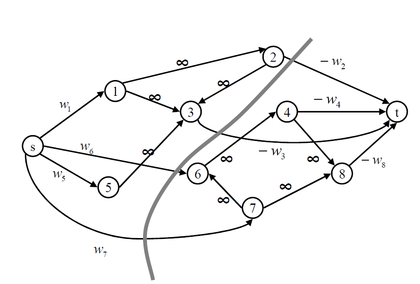

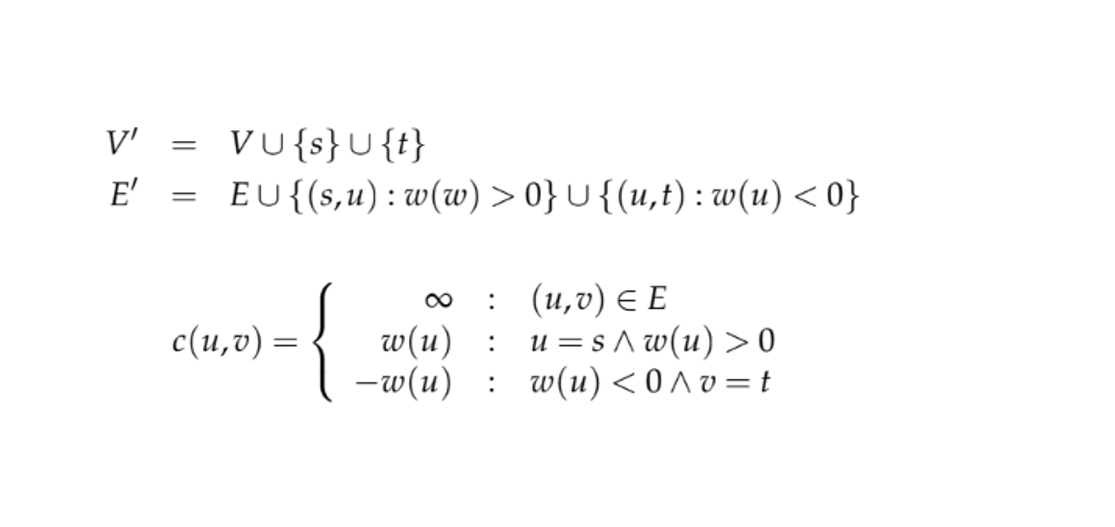
